Hark! What breaks over yonder horizon? A new paravelinc.com homepage! Paravel is entering our 12th year of business together and over the years we’ve laughed, we’ve cried, had babies, and had countless terrible fights about websites. We love what we do.

The most notable part of this update is the writing on our new Services pages. We spent a lot of time internally figuring out how to explain why we value Design Systems and Prototypes, as well as the value we believe they can bring to your company. If you read anything, read those pages.

You may not have noticed my contribution, but if you sat on the homepage for a short while you may have seen a flicker around the campfire. I had the joy of going down a rabbit hole resurrecting an old animation technique called “color cycling” for our new homepage illustration.

First a Design

We commissioned a new homepage illustration from Matthew Woodson, whose illustration style is incredible and intricately detailed. We were particularly smitten by his Bone Tomahawk Mondo Poster and his Kenai Fjords National Fifty-Nine Parks Poster. It’s an honor to have his art on our site.

Once we had the roughs for the homepage illustration, Reagan suggested animating the fire somehow to give it a glow. The gears started turning in my head.

I remembered an old Canvas Cycle demo by Joseph Huckaby (2010). Using Canvas, Huckaby was able to recreate the retro color cycling animations commonly used in 8-bit games. It was a way to programmatically make 256-color environmental art come alive without adding another frame to the image (like a GIF would). But as games got more colors, more pixels, and more dimensions, this technique fell by the wayside. Huckaby has a great write-up about his Canvas-based Color Cycling technique over on the EffectGames blog.

The source code for the Canvas Cycle demo is available, but it’s a bit old in web terms, weighs in at over 100KB, and our image wasn’t 8-bit, so I’d have to roll my own way of doing things…

Then a Prototype

The first thing I had to prove was that I could even do this myself. The “MVP” was simple: Could I grab a color from an image and use Canvas to manipulate a single color?

As I thought about the problem, I realized my old friend star-power Mario is probably the best reduced test case of what I want to achieve. So you know I started with a prototype…

CONTENT WARNING: SEIZURES.

See the Pen [WARNING: Seizures] by Dave Rupert (@davatron5000) on CodePen.

Success! I can cycle Mario’s outfit. The code in here is a HueShift() method I stole from StackOverflow. HueShift() loops through every pixel in the giant array of Canvas ImageData. For each pixel, it converts the RGBA value to HSL, checks whether the hue (the H in Hue-Saturation-Lightness) falls within the range of hues we want to shift, and if so, shifts it by a random amount. Then it takes all those shifted pixels and injects them back into the Canvas.
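To make that loop concrete, here is a minimal sketch of the same kind of hue-shift pass. It is not the StackOverflow code from the Pen; it assumes hue, saturation, and lightness all live in the 0 to 1 range, that `shift` is a fraction of a full turn around the color wheel, and that alpha is left untouched. `hueShift` is a hypothetical stand-in for `HueShift()`, and the random amount is left to the caller.

```javascript
// Convert 0-255 RGB to HSL, each component in [0, 1].
function rgbToHsl(r, g, b) {
  r /= 255; g /= 255; b /= 255;
  const max = Math.max(r, g, b);
  const min = Math.min(r, g, b);
  const l = (max + min) / 2;
  if (max === min) return [0, 0, l]; // gray: no hue to speak of
  const d = max - min;
  const s = l > 0.5 ? d / (2 - max - min) : d / (max + min);
  let h;
  if (max === r) h = (g - b) / d + (g < b ? 6 : 0);
  else if (max === g) h = (b - r) / d + 2;
  else h = (r - g) / d + 4;
  return [h / 6, s, l];
}

// Convert HSL in [0, 1] back to 0-255 RGB.
function hslToRgb(h, s, l) {
  if (s === 0) {
    const v = Math.round(l * 255);
    return [v, v, v];
  }
  const hueToRgb = (p, q, t) => {
    if (t < 0) t += 1;
    if (t > 1) t -= 1;
    if (t < 1 / 6) return p + (q - p) * 6 * t;
    if (t < 1 / 2) return q;
    if (t < 2 / 3) return p + (q - p) * (2 / 3 - t) * 6;
    return p;
  };
  const q = l < 0.5 ? l * (1 + s) : l + s - l * s;
  const p = 2 * l - q;
  return [
    Math.round(hueToRgb(p, q, h + 1 / 3) * 255),
    Math.round(hueToRgb(p, q, h) * 255),
    Math.round(hueToRgb(p, q, h - 1 / 3) * 255),
  ];
}

// Shift every pixel whose hue lies in [minHue, maxHue] by `shift`.
// `data` is an RGBA byte array shaped like ImageData.data; it is edited in place.
function hueShift(data, shift, minHue, maxHue) {
  for (let i = 0; i < data.length; i += 4) {
    const [h, s, l] = rgbToHsl(data[i], data[i + 1], data[i + 2]);
    if (h < minHue || h > maxHue) continue; // outside the band we animate
    const shifted = (((h + shift) % 1) + 1) % 1; // wrap around the color wheel
    const [r, g, b] = hslToRgb(shifted, s, l);
    data[i] = r;
    data[i + 1] = g;
    data[i + 2] = b; // data[i + 3] (alpha) is left alone
  }
  return data;
}
```

In a browser this would run on `ctx.getImageData(...).data`, followed by `ctx.putImageData()` to push the result back onto the canvas.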

Sharing the Process

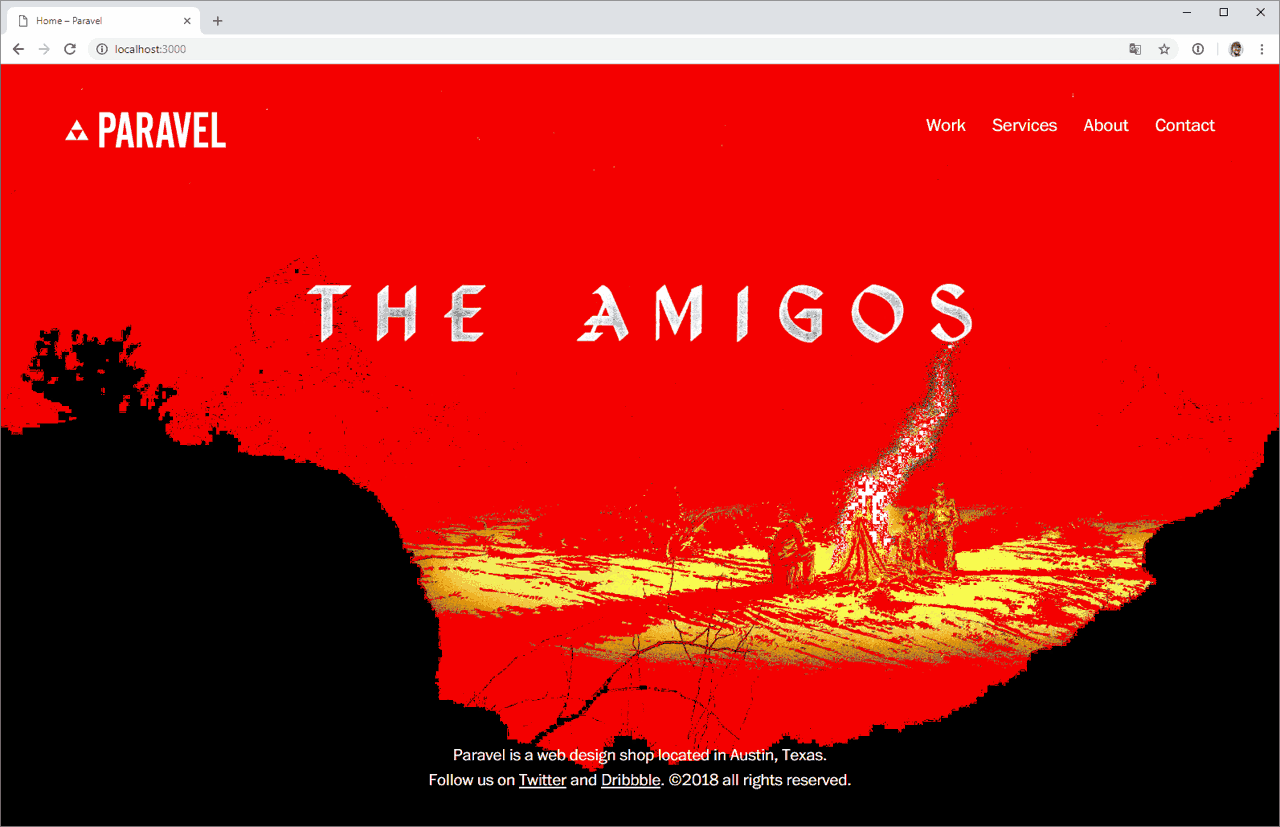

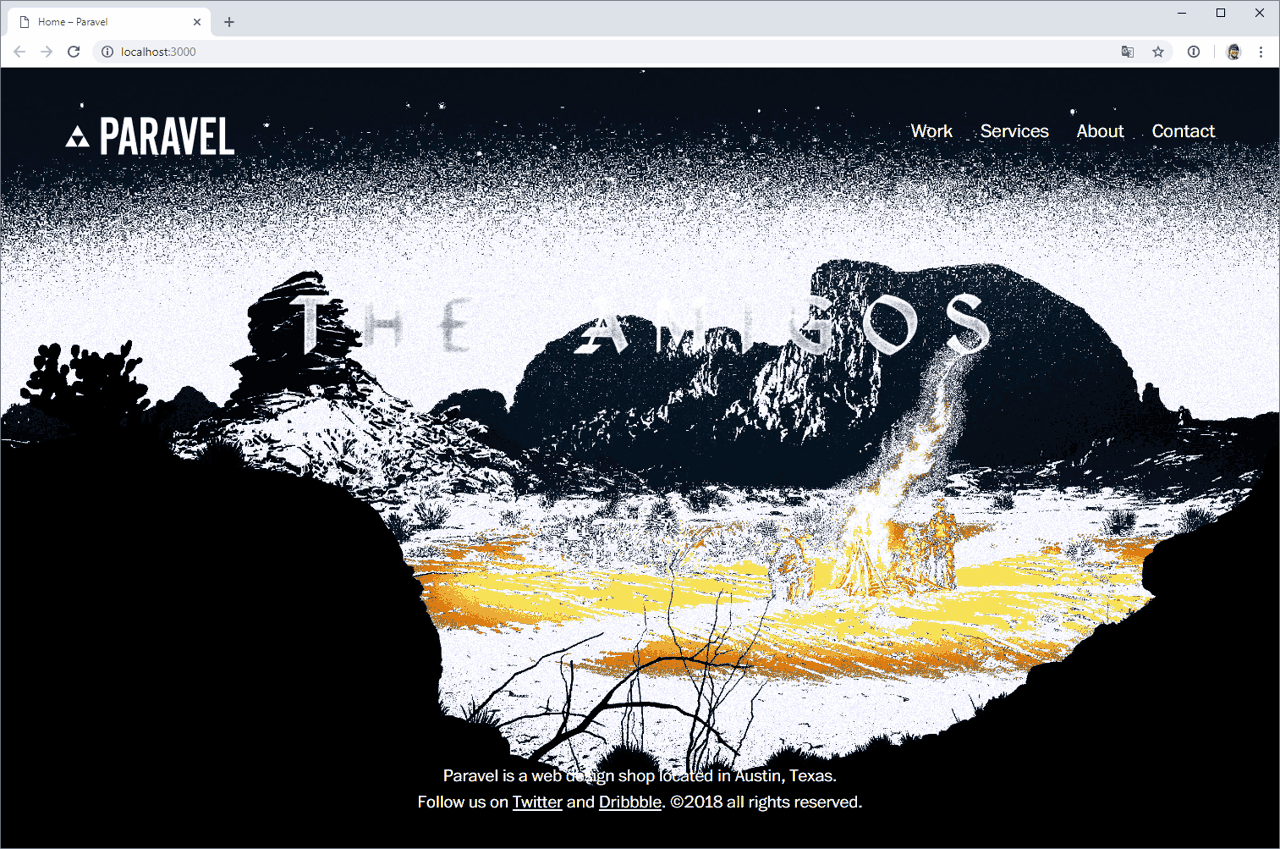

The next job was applying the color shift to the homepage illustration. The code from the CodePen mostly worked out of the box. Here are a few of the “happy accidents” I screengrabbed and shared on Slack while doing this.

While I’m able to cycle colors, it’s cycling every color. I needed a tighter scope on the colors I wanted to animate, so I adjusted some minHue and maxHue variables.
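A plain `h >= minHue && h <= maxHue` check covers most bands, but reds sit right on the 0/1 seam of the HSL wheel, which matters when the thing you are animating is fire. One hedged way to handle that, assuming hues in the 0 to 1 range, is to treat `minHue > maxHue` as a band that wraps through red. `inHueRange` is a hypothetical helper, not code from the site.

```javascript
// Is hue h (0 to 1) inside the band from minHue to maxHue?
// When minHue > maxHue, the band is treated as wrapping through 0 (red),
// e.g. minHue = 0.95, maxHue = 0.1 covers deep reds through oranges.
function inHueRange(h, minHue, maxHue) {
  if (minHue <= maxHue) return h >= minHue && h <= maxHue;
  return h >= minHue || h <= maxHue;
}
```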

After some experimentation, I got the correct pixels cycling. Next, the color shift on the HSL color wheel was too aggressive, so I needed to dampen the randomized cycle amount.

It was still a process to get it right. At one point I made every animated pixel red so I could test my color scoping and things got downright post-apocalyptic.

And here’s me trying to animate the color white…

Hear me out tho… this is pretty cool.

The important thing I want to stress isn’t that I was doing dumb shit in the browser; it’s that I was sharing these videos and screenshots with my team in Slack. The whole way, I shared my progress and my grotesque failures. Unlocking how to programmatically mess with individual pixels of an image in Canvas has a lot of potential, but casually keeping your coworkers abreast of your progress unlocks even more.

Don’t hoard the process.

Honing the Animation

One thing you can maybe get from the video is that the fire and the glow weren’t animating like… well… fire. I’ve watched enough Coding Train to know the answer here is Perlin Noise! I felt like the coolest hipster coder dropping in a 1D Perlin Noise generator and sequentially plucking perfectly linearly interpolated noise values…
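For reference, 1D Perlin-style gradient noise fits in a few lines. This is a generic sketch, not the generator used on the site: it gives every integer lattice point a random slope, blends the two neighbouring contributions with Perlin’s quintic fade curve (rather than straight linear interpolation), and uses a small seeded linear congruential generator, an assumption made only so the output is reproducible. `makeNoise1D` is a hypothetical name.

```javascript
function makeNoise1D(seed = 1) {
  // Tiny LCG so the gradient table is the same for a given seed.
  let state = seed >>> 0;
  const random = () => {
    state = (state * 1664525 + 1013904223) % 4294967296;
    return state / 4294967296; // in [0, 1)
  };
  // One random gradient (slope) in [-1, 1) per lattice point.
  const gradients = Array.from({ length: 256 }, () => random() * 2 - 1);
  // Perlin's quintic fade curve: 6t^5 - 15t^4 + 10t^3.
  const fade = (t) => t * t * t * (t * (t * 6 - 15) + 10);

  return function noise(x) {
    const i0 = Math.floor(x);
    const t = x - i0;                     // position between lattice points, in [0, 1)
    const g0 = gradients[i0 & 255];       // the lattice repeats every 256 units
    const g1 = gradients[(i0 + 1) & 255];
    const n0 = g0 * t;                    // contribution from the left point
    const n1 = g1 * (t - 1);              // contribution from the right point
    return n0 + (n1 - n0) * fade(t);      // smooth blend; exactly 0 at integers
  };
}

// Usage: step along x a little each frame for a smoothly wandering value.
const noise = makeNoise1D(42);
const samples = Array.from({ length: 5 }, (_, frame) => noise(frame * 0.1));
```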

But it didn’t feel right. I did some research into how to animate fire and came across a paper from Pixar Research about Wavelet Noise, a band-limited alternative to Perlin Noise whose curves looked to me a lot like an ocean wave crashing. Rather than coding out my own Wavelet Noise generator, I just stole some point values from a wavelet noise chart I found.

```javascript
const waveletNoise = [0, .1, -.2, -.5, -.8, -.2, .05, 1, .8, .5, 0];
```

Using this rudimentary array for my shift values, the pulse of the color shift felt a lot more like fire, but it was mechanical and overly sequenced, like those Canvas Cycle demos. So rather than returning to the 0 index in my waveletNoise, I cycled back to a random point in the array. This is embarrassingly cheap, but it gives the desired effect.
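The random re-entry trick is small enough to show on its own. This sketch uses the `waveletNoise` array from above; `nextShiftIndex` and `nextShiftValue` are hypothetical helpers, and `random` is injectable so the behavior can be tested. The same logic appears inline in `shift()` further down.

```javascript
const waveletNoise = [0, .1, -.2, -.5, -.8, -.2, .05, 1, .8, .5, 0];

// Step to the next index, but after the last value jump back in at a
// random index instead of 0, so the flicker never loops in lockstep.
function nextShiftIndex(index, length, random = Math.random) {
  const next = index + 1;
  return next < length ? next : Math.floor(random() * length);
}

// Usage: read one noise value per frame.
let shiftIndex = 0;
function nextShiftValue() {
  const value = waveletNoise[shiftIndex];
  shiftIndex = nextShiftIndex(shiftIndex, waveletNoise.length);
  return value;
}
```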

Identifying Performance Problems

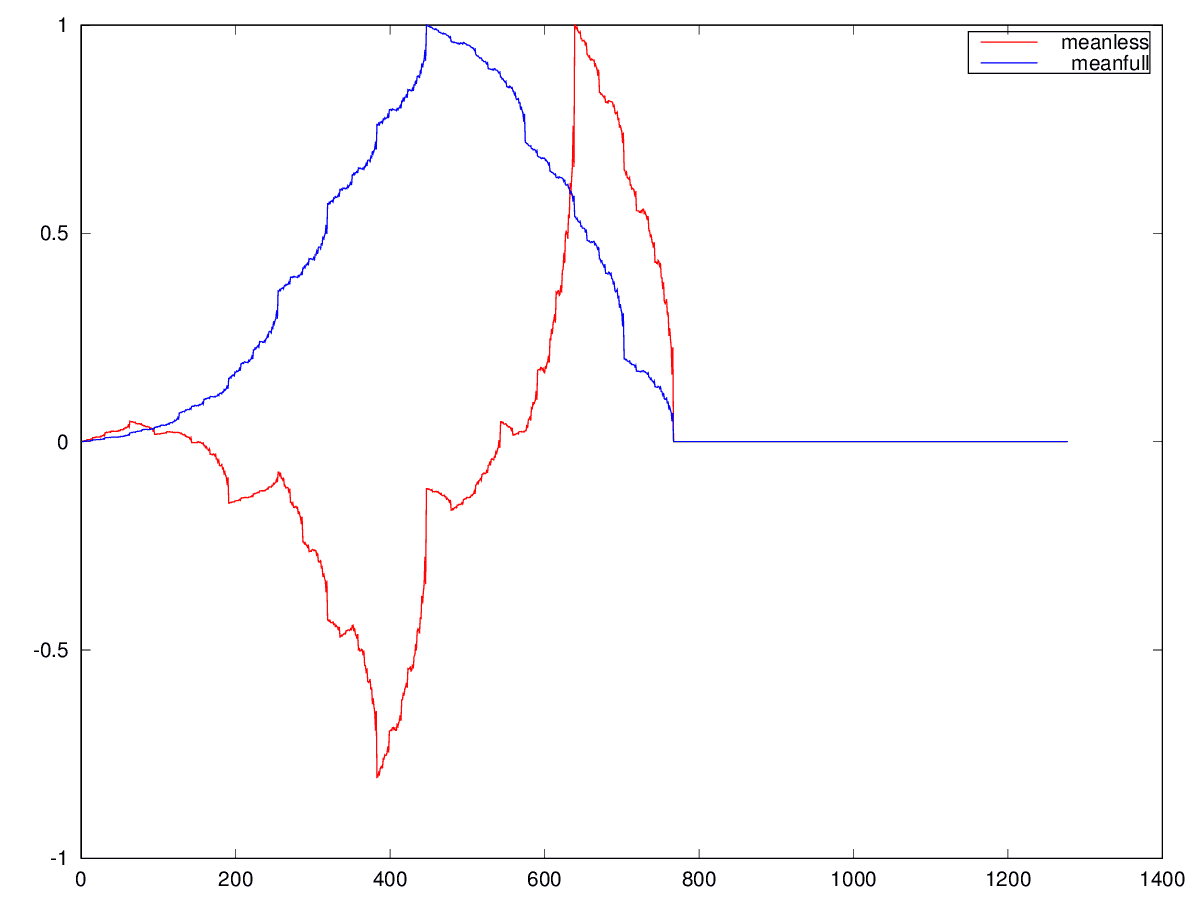

Based on the amount of noise coming from my computer fans, I knew we had performance problems. Even though I was using requestAnimationFrame, I was only getting a frame every 300ms instead of every 16ms, and there was a “Giant Yellow Slug” in the Chrome DevTools > Performance panel. I had completely jammed up the Main UI thread.

I eventually figured out that my performance problems scaled linearly with the size of my image. Smaller images perform better than larger images: fewer pixels, better performance.

We’re serving a smaller 1200w image to phones but that big desktop homepage image is 2850x2250, that’s 6,412,500 pixels. As I said before, in order to achieve this hue-shifting effect, I need to loop through every pixel of that image. That ends up being ~26 million items in a for-loop because of the RGBA color channels in the ImageData. And I want to do that on every frame.

I was able to speed things up considerably by preprocessing the image and getting a list of pixels that needed to be shifted. But that number was still at ~320,000 pixels (1.2m length array).
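Here is a hedged sketch of that kind of preprocessing pass, assuming hues in the 0 to 1 range and a simple in-range check. `hueOf` and `findShiftablePixels` are hypothetical names. The pass scans the RGBA buffer once and keeps the byte offset of each pixel worth shifting, so later frames only visit those offsets.

```javascript
// Hue of an RGB pixel in [0, 1]; works directly on 0-255 values because only ratios matter.
function hueOf(r, g, b) {
  const max = Math.max(r, g, b);
  const min = Math.min(r, g, b);
  const d = max - min;
  if (d === 0) return 0; // gray pixel
  let h;
  if (max === r) h = ((g - b) / d + 6) % 6;
  else if (max === g) h = (b - r) / d + 2;
  else h = (r - g) / d + 4;
  return h / 6;
}

// One pass over every pixel: record the byte offset (index of the R channel)
// of each pixel whose hue falls in [minHue, maxHue].
function findShiftablePixels(data, minHue, maxHue) {
  const offsets = [];
  for (let i = 0; i < data.length; i += 4) {
    const h = hueOf(data[i], data[i + 1], data[i + 2]);
    if (h >= minHue && h <= maxHue) offsets.push(i);
  }
  return Uint32Array.from(offsets);
}

// Per frame, only these pixels are touched:
// for (const i of offsets) { /* read data[i], data[i + 1], data[i + 2]; write shifted RGB */ }
```

Storing one offset per pixel, rather than copying all four channel values, keeps the list a quarter of the length; the per-frame loop reads the channels through each offset instead.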

I eventually abandoned that solution and decided to try an old but new-to-me technology called Web Workers.

Refactoring as Necessary

Workers were designed for giant thread-blocking operations like these. They take code off the Main UI thread and run it in a separate background thread. Spinning up workers isn’t trivial work; there’s a delicate balance between “What are my expensive operations?” and “Does this interact with the DOM/Canvas?”, since Workers can’t touch the DOM. But I was able to break off the color shifting chunk of logic and move it into a Worker.

Here’s a truncated look at the approach I took for modifying my hue-shifting loop:

```javascript
// main-ui.js

let shiftCycleCount = 0;
const shiftWorker = new Worker('shift-worker.js');
const shiftDampener = 40; // Need to dampen the shift amount.

function shift() {
  // call worker
  let shiftAmount = waveletNoise[shiftCycleCount] / shiftDampener;

  shiftWorker.postMessage({
    shiftAmount: shiftAmount,
    minHue: minHue,
    maxHue: maxHue,
    originalData: originalData,
    imgData: data
  });

  // Step through waveletNoise; after the last value, re-enter at a random index.
  shiftCycleCount = shiftCycleCount + 1 === waveletNoise.length
    ? Math.floor(Math.random() * waveletNoise.length)
    : shiftCycleCount + 1;
}
```

shift() then hands off a mountain of data to the shiftWorker using postMessage. The Worker looks like this…

```javascript
// shift-worker.js

function rgbToHsl(r, g, b) { /* ... */ }

function hslToRgb(h, s, l) { /* ... */ }

function HueShift(shift, minHue, maxHue, originalData, data) {
  for (var i = 0; i < data.length; i += 4) {
    // rgb2hsl conversion, comparison, shifting, and hsl2rgb reconversion happens here.
  }
  return data;
}

self.onmessage = function (msg) {
  var shiftedData = HueShift(msg.data.shiftAmount, msg.data.minHue, msg.data.maxHue, msg.data.originalData, msg.data.imgData);
  self.postMessage({ result: shiftedData });
};
```

Feel free to check out our shift-worker.js. When shiftWorker is done with its mega operations, it hands the result (a manipulated array of pixels) back to the Main UI thread via postMessage. The UI waits for the shiftWorker.onmessage event…

```javascript
shiftWorker.onmessage = function (event) {
  data.set(event.data.result);
  ctx.putImageData(imgData, 0, 0);
  requestAnimationFrame(shift);
};
```

The magic here was discovering data.set(). I found this solution in a dark corner of the web. set() is a typed array method (Uint8ClampedArray has it) that copies our new manipulated values directly into the existing Canvas ImageData buffer. Plain reassignment, data = event.data.result, didn’t work because it only points the local data variable at the Worker’s new array; imgData.data still references the original buffer, so putImageData() keeps drawing the old pixels.
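The difference between reassigning and `set()` is easy to see outside the browser. This sketch uses a plain object as a stand-in for `ImageData`, an assumption made because Node has no canvas. In a real browser `imgData.data` is also read-only, so writing into that existing array is the way to change the pixels it holds.

```javascript
// Stand-in for a canvas ImageData: `data` is the buffer the canvas draws from.
const imgData = { data: new Uint8ClampedArray([0, 0, 0, 255]) };
let data = imgData.data;

// Pretend this came back from the worker via postMessage (a structured-clone copy).
const result = new Uint8ClampedArray([255, 128, 0, 255]);

// Reassignment only repoints the local variable; imgData.data still holds 0, 0, 0, 255.
data = result;

// set() copies result's values into the existing buffer in place,
// so imgData.data now holds 255, 128, 0, 255.
data = imgData.data;
data.set(result);
```

`set()` throws a `RangeError` if the source array doesn’t fit in the target, so the buffer coming back from the Worker has to be no longer than the canvas buffer.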

I still have some baby yellow slugs in my Performance panel, but I think writing 6.5 million pixels to the canvas is probably CPU intensive. There is an upcoming Offscreen Canvas API (available in Canary) where Workers can update Canvas directly that aims to ease this bottleneck for Video and VR applications. That should help one day.

The 6.5 million pixel roundtrip takes ~200ms, resulting in ~5 FPS (on a high-end desktop). That could be better, but luckily it achieves a bit of that 8-bit color cycle feeling. Different browsers cycle at different speeds, but I’m comfortable with that.

Closing Thoughts

I’m happy with where the animation is currently at, but I’m sure I’ll keep iterating to see if I can optimize this further. It’d be great to have too many frames coming too quickly and have to throttle the rAF intentionally, rather than barely making the 5 FPS window when the Worker finishes. Probably the biggest lever I can pull is reducing the number of pixels I’m cycling through. This should give the performance gains I need.

Ideally browsers would have native rgb2hsl and hsl2rgb color functions. Seems like they should. Color manipulation and luminosity seem like basic web tech at this point.

Anyways, thanks for reading. If you got this far, you must really like animation or retro color cycling. If you’re a webperf nerd, feel free to trace and profile and offer suggestions! I’d love to know how it can be better.

Updates

I’ve been iterating a little on this based on feedback in the comments.

- Storing the image data in the Worker, as opposed to passing it back and forth every time, brought a 100% performance improvement, from 3.5 FPS to 7 FPS.

- Storing a list of shiftablePixels during the first pass saves us an order of magnitude in operation costs, from 22 million to just 2 million items in our array. This was another big performance boost: a ~50% increase from 7 FPS to 9 FPS, hitting upwards of 12 FPS.
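One hedged way to structure the “store the image data in the Worker” change: the first message sends the pixels once, and every later message sends only a shift amount. `createShiftHandler`, the `init`/`shift` message types, and the injected `findOffsets` and `shiftPixel` callbacks are all hypothetical; the real shift-worker.js may be organized differently.

```javascript
// Worker-side state, kept for the Worker's lifetime.
function createShiftHandler(findOffsets, shiftPixel) {
  let original = null; // untouched pixels from the first message
  let offsets = null;  // byte offsets of pixels worth shifting
  let output = null;   // reusable output buffer

  return function handle(msg) {
    if (msg.type === "init") {
      original = msg.pixels;
      offsets = findOffsets(original);
      output = new Uint8ClampedArray(original); // copy; unshifted pixels never change
      return null;
    }
    if (msg.type === "shift") {
      if (!original) throw new Error("shift received before init");
      // Recompute only the cached offsets, always from the original pixels,
      // so shifts don't accumulate frame over frame.
      for (const i of offsets) shiftPixel(original, output, i, msg.shiftAmount);
      return output;
    }
    throw new Error(`Unknown message type: ${msg.type}`);
  };
}

// Possible wiring inside shift-worker.js:
// const handle = createShiftHandler(myFindOffsets, myShiftPixel);
// self.onmessage = (e) => {
//   const out = handle(e.data);
//   if (out) self.postMessage({ result: out });
// };
```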

Firefox and Chrome are now finishing the short loop in 80~90ms. Unfortunately, Edge’s Worker performance has tanked. Edge now takes 10,000ms to do the first big loop and 4,200ms on each shorter loop. Needless to say, the effect is broken in Edge. I isolated it to the first change of storing the image data in the Worker. I think this might be due to Edge’s overall performance. I’m even seeing dramatic differences when timing the initial variable instantiation if-statement: Edge takes 250ms, Chrome 22ms, and Firefox 5ms. On subsequent loops, Firefox and Chrome rightly take 0ms, but Edge still takes 250ms.

Known Issues

- We’re using object-fit to size and position the Canvas. Edge, for whatever reason, has decided not to allow object-fit on Canvas elements, so Edge users (read: me) get a stretched background.
- Edge’s worker performance is noticeably slower than Chrome’s, with Firefox sitting somewhere in between.